1: Examples of common false beliefs in mathematics (score 212191 in 2015)

Question

The first thing to say is that this is not the same as the question about interesting mathematical mistakes. I am interested about the type of false beliefs that many intelligent people have while they are learning mathematics, but quickly abandon when their mistake is pointed out – and also in why they have these beliefs. So in a sense I am interested in commonplace mathematical mistakes.

Let me give a couple of examples to show the kind of thing I mean. When teaching complex analysis, I often come across people who do not realize that they have four incompatible beliefs in their heads simultaneously. These are

- a bounded entire function is constant;

- \(\sin z\) is a bounded function;

- \(\sin z\) is defined and analytic everywhere on \(\mathbb{C}\);

- \(\sin z\) is not a constant function.

Obviously, it is (ii) that is false. I think probably many people visualize the extension of \(\sin z\) to the complex plane as a doubly periodic function, until someone points out that that is complete nonsense.

A second example is the statement that an open dense subset \(U\) of \(\mathbb{R}\) must be the whole of \(\mathbb{R}\). The “proof” of this statement is that every point \(x\) is arbitrarily close to a point \(u\) in \(U\), so when you put a small neighbourhood about \(u\) it must contain \(x\).

Since I’m asking for a good list of examples, and since it’s more like a psychological question than a mathematical one, I think I’d better make it community wiki. The properties I’d most like from examples are that they are from reasonably advanced mathematics (so I’m less interested in very elementary false statements like \((x+y)^2=x^2+y^2\), even if they are widely believed) and that the reasons they are found plausible are quite varied.

Answer accepted (score 597)

For vector spaces, \(\dim (U + V) = \dim U + \dim V - \dim (U \cap V)\), so \[ \dim(U +V + W) = \dim U + \dim V + \dim W - \dim (U \cap V) - \dim (U \cap W) - \dim (V \cap W) + \dim(U \cap V \cap W), \] right?

Answer 2 (score 325)

Everyone knows that for any two square matrices \(A\) and \(B\) (with coefficients in a commutative ring) that \[\operatorname{tr}(AB) = \operatorname{tr}(BA).\]

I once thought that this implied (via induction) that the trace of a product of any finite number of matrices was independent of the order they are multiplied.

Answer 3 (score 296)

Many students believe that 1 plus the product of the first \(n\) primes is always a prime number. They have misunderstood the contradiction in Euclid’s proof that there are infinitely many primes. (By the way, \(2 \cdot 3 \cdot 5 \cdot 7 \cdot 11 \cdot 13 + 1\) is not prime and there are many other such examples.)

Much later edit: As pointed out elsewhere in this thread, Euclid’s proof is not by contradiction; that is another widespread false belief.

Much much later edit: Euclid’s proof is not not by contradiction. This is another very widespread false belief. It depends on personal opinion and interpretation what a proof by contradiction is and whether Euclid’s proof belongs to this category. In fact, if the derivation of an absurdity or the contradiction of an assumption is a proof by contradiction, then Euclid’s proof is a proof by contradiction. Euclid says (Elements Book 9 Proposition 20): The very thing (is) absurd. Thus, G is not the same as one of A, B, C. And it was assumed (to be) prime.

Nb. The above edits were not added by the OP of this answer.

Edit on 24 July 2017: Euclid’s proof was not by contradiction, but contains a small lemma in the middle of it that is proved by contradiction. The proof shows that if \(S\) is any finite set of primes (not assumed to be the set of all primes) then the prime factors of \(1+\prod S\) are not in \(S\), so there is at least one more prime than those in \(S.\) The proof that \(\prod\) and \(1+\prod\) have no common factors is the part that is by contradiction. All of this is shown in the following paper: M. Hardy and C. Woodgold, “Prime simplicity”, Mathematical Intelligencer 31 (2009), 44–52.

2: The factorial of -1, -2, -3, (score 185397 in 2018)

Question

Well, \(n!\) is for integer \(n < 0\) not defined — as yet.

So the question is:

How could a sensible generalization of the factorial for negative integers look like?

Clearly a good generalization should have a clear combinatorial meaning which combines well with the nonnegative case.

Answer accepted (score 45)

It’s not that it’s not defined… Actually it has been defined more than it should have. There are plenty of functions that interpolate the factorials, some of them extend to the negative integers as well. Hadamard’s Gamma function is entire, logarithmic single inflected factorial function is another example. But on the other hand, for some mysterious reason, the nice property that we want an extension of the factorial to enjoy is log-convexity. The Bohr-Mollerup-Artin Theorem tells us that the only function which is logarithmically convex on the positive real line and satisfies \(f(z)=zf(z-1)\) there (also \(f(1)=1\) and \(f(z)>0\)) is the Gamma function. Unfortunately the gamma function doesn’t extend to negative integers, and that is why I guess people don’t really care that much for defining them as they know that no “good” answer can be found.

Answer 2 (score 26)

I think it’s worth pointing out here that, if \(a\ge0\), then, near z = -a, we have

\[ \Gamma(z) = (-1)^a {1 \over a!} {1 \over {z+a}} + O(1) \]

and so it might be tempting to say that, in some sense,

\[ \Gamma(-a) = (-1)^a {1 \over a!} \infty \]

where the symbol \(\infty\) represents the rate at which \(\Gamma\) blows up near the pole at \(a = 0\). That is, \(\Gamma(0) = \infty, \Gamma(-1) = -\infty, \Gamma(-2) = \infty/2, \Gamma(-3) = -\infty/6\), and so on.

In particular, this interpretation might work in some formula in which \(\Gamma\) evaluated at nonpositive integers appears in both the numerator and the denominator, and the symbol \(\infty\) can be canceled to yield a real number.

Answer 3 (score 12)

For a related paper see D. Loeb, Sets with a negative number of elements, Adv. Math. 91 (1992), 64–74.

3: Too old for advanced mathematics? (score 168920 in 2014)

Question

Kind of an odd question, perhaps, so I apologize in advance if it is inappropriate for this forum. I’ve never taken a mathematics course since high school, and didn’t complete college. However, several years ago I was affected by a serious illness and ended up temporarily disabled. I worked in the music business, and to help pass the time during my convalescence I picked up a book on musical acoustics.

That book reintroduced me to calculus with which I’d had a fleeting encounter with during high school, so to understand what I was reading I figured I needed to brush up, so I picked up a copy of Stewart’s “Calculus”. Eventually I spent more time working through that book than on the original text. I also got a copy of “Differential Equations” by Edwards and Penny after I had learned enough calculus to understand that. I’ve also been learning linear algebra - MIT’s lectures and problem sets have really helped in this area. I’m fascinated with the mathematics of the Fourier transform, particularly its application to music in the form of the DFT and DSP - I’ve enjoyed the lectures that Stanford has available on the topic immensely. I just picked up a little book called “Introduction To Bessel Functions” by Frank Bowman that I’m looking forward to reading.

The difficulty is, I’m 30 years old, and I can tell that I’m a lot slower at this than I would have been if I had studied it at age 18. I’m also starting to feel that I’m getting into material that is going to be very difficult to learn without structure or some kind of instruction - like I’ve picked all the low-hanging fruit and that I’m at the point of diminishing returns. I am fortunate though, that after a lot of time and some great MDs my illness is mostly under control and I now have to decide what to do with “what comes after.”

I feel a great deal of regret, though, that I didn’t discover that I enjoyed this discipline until it was probably too late to make any difference. I am able, however, to return to college now if I so choose.

The questions I’d like opinions on are these: is returning to school at my age for science or mathematics possible? Is it worth it? I’ve had a lot of difficulty finding any examples of people who have gotten their first degrees in science or mathematics at my age. Do such people exist? Or is this avenue essentially forever closed beyond a certain point? If anyone is familiar with older first-time students in mathematics or science - how do they fare?

Answer accepted (score 86)

This is indeed not a typical math overflow question, but never mind that.

Of course you can learn mathematics at the age of 30 after having stopped studying it at the age of 18! Examples are abundant – in almost every math department I’ve ever been in, there are at least one or two older graduate students that took some years off (after high school, after college or both) and did quite well upon their return.

Being older than 18 may not be a bad thing. Many 18 year-olds are neither well-prepared nor well-motivated to study mathematics (or something else) at the university level: a lot of them are there because their parents want them to be, and most of them are there because their parents are paying.

It is true that essential skills get rusty after years of disuse – when I teach “freshman calculus”, older students often do not do very well, even if “older” means 21 or 22: they’ve forgotten too much precalculus mathematics. But you have been learning about calculus, differential equations and linear algebra on your own and enjoying it! You’re looking forward to reading a book on Bessel functions!! You’re well past the point where older, rusty students have trouble. You can do it, for sure, and it sounds like you want to, so you should.

By the way, 30 is not remotely old. I am a few years older and I think better and more quickly now than I did when I was your age.

Answer 2 (score 53)

Dear bitrex: your enthusiasm is heart-warming!

I have had students much older than you and they have always been a joy to teach: their maturity more than compensated for their potential knowledge-gaps and they fared very well on their exams.

The nicest success story is a professional cellist who didn’t even have the “baccalauréat”, a French diploma for the end of secondary school usually taken at age 18. He started learning math because he had married a math teacher (!) and became an excellent student. He passed his D.E.A. (a sort of undergraduate thesis) brilliantly and unfortunately couldn’t accept my suggestion to do a Ph.D. because of his professional activity ( I sometimes hear him at concerts…).

So my advice is to go on with your mathematics: I can’t predict the future but my feeling is that your age is not very relevant. Good luck!

Answer 3 (score 52)

With all this unanimous enthusiasm, I can’t help but add a cautionary note. I will say, however, that what I’m about to say applies to anyone of any age trying to get a Ph.D. and pursue a career as an academic mathematician.

If you think you want a Ph.D. in mathematics, you should first try your best to talk yourself out of it. It’s a little like aspiring to be a pro athlete. Even under the best of circumstances, the chances are too high that you’ll end up in a not-very-well-paying job in a not-very-attractive geographic location. Or, if you insist on living in, say, New York, you may end up teaching as an adjunct at several different places.

Someone with your mathematical talents and skills can often find much more rewarding careers elsewhere.

You should pursue the Ph.D. only if you love learning, doing, and teaching mathematics so much that you can’t bear the thought of doing anything else, so you’re willing to live with the consequences of trying to make a living with one. Or you have an exit strategy should things not work out.

Having said all that, I have a story. When I was at Rice in the mid 80’s, a guy in his 40’s or 50’s came to the math department and told us he really wanted to become a college math teacher. He had always loved math but went into sales(!) and had a very successful career. With enough money stashed away, he wanted to switch to a career in math. To put it mildly, we were really skeptical, mostly because he had the overly cheery outgoing personality of a salesman and therefore was completely unlike anyone else in the math department. It was unthinkable that someone like that could be serious about math. Anyway, we warned him that his goal was probably unrealistic but he was welcome to try taking our undergraduate math curriculum to prepare. Not surprisingly, he found this rather painful, but eventually to our amazement he started to do well in our courses, including all the proofs in analysis. By the end, we told the guy that we thought he really had a shot at getting a Ph.D. and have a modest career as a college math teacher. He thanked us but told us that he had changed his mind. As much as he loved doing the math, it was a solitary struggle and took too much of his time away from his family and friends. In the end, he chose them over a career in math (which of course was a rather shocking choice to us).

So if you really want to do math and can afford to live with the consequences, by all means go for it.

4: Mathematical “urban legends” (score 164396 in 2011)

Question

When I was a young and impressionable graduate student at Princeton, we scared each other with the story of a Final Public Oral, where Jack Milnor was dragged in against his will to sit on a committee, and noted that the class of topological spaces discussed by the speaker consisted of finite spaces. I had assumed this was an “urban legend”, but then at a cocktail party, I mentioned this to a faculty member, who turned crimson and said that this was one of his students, who never talked to him, and then had to write another thesis (in numerical analysis, which was not very highly regarded at Princeton at the time). But now, I have talked to a couple of topologists who should have been there at the time of the event, and they told me that this was an urban legend at their time as well, so maybe the faculty member was pulling my leg.

So, the questions are: (a) any direct evidence for or against this particular disaster? (b) what stories kept you awake at night as a graduate student, and is there any evidence for or against their truth?

EDIT (this is unrelated, but I don’t want to answer my own question too many times): At Princeton, there was supposedly an FPO in Physics, on some sort of statistical mechanics, and the constant \(k\) appeared many times. The student was asked:

Examiner: What is \(k?\)

Student: Boltzmann’s constant.

Examiner: Yes, but what is the value?

Student: Gee, I don’t know…

Examiner: OK, order of magnitude?

Student: Umm, don’t know, I just know \(k\dots\)

The student was failed, since he was obviously not a physicist.

Answer accepted (score 193)

This happened just last year, but it certainly deserves to be included in the annals of mathematical legends:

A graduate student (let’s call him Saeed) is in the airport standing in a security line. He is coming back from a conference, where he presented some exciting results of his Ph.D. thesis in Algebraic Geometry. One of the people whom he met at his presentation (let’s call him Vikram) is also in the line, and they start talking excitedly about the results, and in particular the clever solution to problem X via blowing up eight points on a plane.

They don’t notice other travelers slowly backing away from them.

Less than a minute later, the TSA officers descend on the two mathematicians, and take them away. They are thoroughly and intimately searched, and separated for interrogation. For an hour, the interrogation gets nowhere: the mathematicians simply don’t know what the interrogators are talking about. What bombs? What plot? What terrorism?

The student finally realizes the problem, pulls out a pre-print of his paper, and proceeds to explain to the interrogators exactly what “blowing up points on a plane” means in Algebraic Geometry.

Answer 2 (score 147)

Since this has become a free-for-all, allow me to share an anecdote that I wouldn’t quite believe if I hadn’t seen it myself.

I attended graduate school in Connecticut, where seminars proceeded with New England gentility, very few questions coming from the audience even at the end. But my advisor Fred Linton would take me down to New York each week to attend Eilenberg’s category theory seminars at Columbia. These affairs would go on for hours with many interruptions, particularly from Sammy who would object to anything said in less than what he regarded as the optimal way. Now Fred had a tendency to doze off during talks. One particular week a well-known category theorist (but I’ll omit his name) was presenting some of his new results, and Sammy was giving him a very hard time. He kept saying “draw the right diagram, draw the right diagram.” Sammy didn’t know what diagram he wanted and he rejected half a dozen attempts by the speaker, and then at least an equal number from the audience. Finally, when it all seemed a total impasse, Sammy, after a weighty pause said “Someone, wake up Fred.” So someone tapped Fred on the shoulder, he blinked his eyes and Sammy said, in more measured tones than before, “Fred, draw the right diagram.” Fred looked up at the board, walked up, drew the right diagram, returned to his chair, and promptly went back to sleep. And so the talk continued.

Thank you all for your indulgence - I’ve always wanted to see that story preserved for posterity and now I have.

Answer 3 (score 135)

Here’s another great one: a certain well known mathematican, we’ll call him Professor P.T. (these are not his initials…), upon his arrival at Harvard University, was scheduled to teach Math 1a (the first semester of freshman calculus.) He asked his fellow faculty members what he was supposed to teach in this course, and they told him: limits, continuity, differentiability, and a little bit of indefinite integration.

The next day he came back and asked, “What am I supposed to cover in the second lecture?”

5: Proofs without words (score 149973 in 2017)

Question

Can you give examples of proofs without words? In particular, can you give examples of proofs without words for non-trivial results?

(One could ask if this is of interest to mathematicians, and I would say yes, in so far as the kind of little gems that usually fall under the title of ‘proofs without words’ is quite capable of providing the aesthetic rush we all so professionally appreciate. That is why we will sometimes stubbornly stare at one of these mathematical autostereograms with determination until we joyously see it.)

(I’ll provide an answer as an example of what I have in mind in a second)

Answer accepted (score 481)

A proof of the identity \[1+2+\cdots + (n-1) = \binom{n}{2}\]

(Adapted from an entry I saw at Wolfram Demonstrations, see also the original faster animation)

This proof was discovered by Loren Larson, professor emeritus at St. Olaf College. He included it along with a number of other, more standard, proofs, in “A Discrete Look at 1+2+…+n,” published in 1985 in The College Mathematics Journal (vol. 16, no. 5, pp. 369-382, DOI: 10.1080/07468342.1985.11972910, JSTOR).

Answer 2 (score 218)

Because I think proof by picture is potentially dangerous, I’ll present a link to the standard proof that 32.5 = 31.5:

An animation of the above is:

(This work has been released into the public domain by its author, Trekky0623 at English Wikipedia. This applies worldwide.)

There does not seem to be any necessity for the particular ‘path in the relevant configuration space’ that was used by the author of the above animated gif. This may be seen as an argument against including an animation.

Answer 3 (score 167)

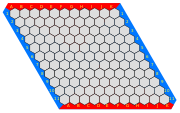

The cardinality of the real number line is the same as a finite open interval of the real number line.

6: Intuitive crutches for higher dimensional thinking (score 128841 in 2016)

Question

I once heard a joke (not a great one I’ll admit…) about higher dimensional thinking that went as follows-

An engineer, a physicist, and a mathematician are discussing how to visualise four dimensions:

Engineer: I never really get it

Physicist: Oh it’s really easy, just imagine three dimensional space over a time- that adds your fourth dimension.

Mathematician: No, it’s way easier than that; just imagine \(\mathbb{R}^n\) then set n equal to 4.

Now, if you’ve ever come across anything manifestly four dimensional (as opposed to 3+1 dimensional) like the linking of 2 spheres, it becomes fairly clear that what the physicist is saying doesn’t cut the mustard- or, at least, needs some more elaboration as it stands.

The mathematician’s answer is abstruse by the design of the joke but, modulo a few charts and bounding 3-folds, it certainly seems to be the dominant perspective- at least in published papers. The situation brings to mind the old Von Neumann quote about “…you never understand things. You just get used to them”, and perhaps that really is the best you can do in this situation.

But one of the principal reasons for my interest in geometry is the additional intuition one gets from being in a space a little like one’s own and it would be a shame to lose that so sharply, in the way that the engineer does, in going beyond 3 dimensions.

What I am looking for, from this uncountably wise and better experienced than I community of mathematicians, is a crutch- anything that makes it easier to see, for example, the linking of spheres- be that simple tricks, useful articles or esoteric (but, hopefully, ultimately useful) motivational diagrams: anything to help me be better than the engineer.

Community wiki rules apply- one idea per post etc.

Answer accepted (score 125)

I can’t help you much with high-dimensional topology - it’s not my field, and I’ve not picked up the various tricks topologists use to get a grip on the subject - but when dealing with the geometry of high-dimensional (or infinite-dimensional) vector spaces such as \(\mathbb R^n\), there are plenty of ways to conceptualise these spaces that do not require visualising more than three dimensions directly.

For instance, one can view a high-dimensional vector space as a state space for a system with many degrees of freedom. A megapixel image, for instance, is a point in a million-dimensional vector space; by varying the image, one can explore the space, and various subsets of this space correspond to various classes of images.

One can similarly interpret sound waves, a box of gases, an ecosystem, a voting population, a stream of digital data, trials of random variables, the results of a statistical survey, a probabilistic strategy in a two-player game, and many other concrete objects as states in a high-dimensional vector space, and various basic concepts such as convexity, distance, linearity, change of variables, orthogonality, or inner product can have very natural meanings in some of these models (though not in all).

It can take a bit of both theory and practice to merge one’s intuition for these things with one’s spatial intuition for vectors and vector spaces, but it can be done eventually (much as after one has enough exposure to measure theory, one can start merging one’s intuition regarding cardinality, mass, length, volume, probability, cost, charge, and any number of other “real-life” measures).

For instance, the fact that most of the mass of a unit ball in high dimensions lurks near the boundary of the ball can be interpreted as a manifestation of the law of large numbers, using the interpretation of a high-dimensional vector space as the state space for a large number of trials of a random variable.

More generally, many facts about low-dimensional projections or slices of high-dimensional objects can be viewed from a probabilistic, statistical, or signal processing perspective.

Answer 2 (score 89)

Here are some of the crutches I’ve relied on. (Admittedly, my crutches are probably much more useful for theoretical computer science, combinatorics, and probability than they are for geometry, topology, or physics. On a related note, I personally have a much easier time thinking about \(R^n\) than about, say, \(R^4\) or \(R^5\)!)

-

If you’re trying to visualize some 4D phenomenon P, first think of a related 3D phenomenon P’, and then imagine yourself as a 2D being who’s trying to visualize P’. The advantage is that, unlike with the 4D vs. 3D case, you yourself can easily switch between the 3D and 2D perspectives, and can therefore get a sense of exactly what information is being lost when you drop a dimension. (You could call this the “Flatland trick,” after the most famous literary work to rely on it.)

-

As someone else mentioned, discretize! Instead of thinking about \(R^n\), think about the Boolean hypercube \(\lbrace 0,1 \rbrace ^n\), which is finite and usually easier to get intuition about. (When working on problems, I often find myself drawing \(\lbrace 0,1 \rbrace ^4\) on a sheet of paper by drawing two copies of \(\lbrace 0,1 \rbrace ^3\) and then connecting the corresponding vertices.)

-

Instead of thinking about a subset \(S \subseteq R^n\), think about its characteristic function \(f : R^n \rightarrow \lbrace 0,1 \rbrace\). I don’t know why that trivial perspective switch makes such a big difference, but it does … maybe because it shifts your attention to the process of computing \(f\), and makes you forget about the hopeless task of visualizing S!

-

One of the central facts about \(R^n\) is that, while it has “room” for only \(n\) orthogonal vectors, it has room for \(\exp(n)\) almost-orthogonal vectors. Internalize that one fact, and so many other properties of \(R^n\) (for example, that the \(n\)-sphere resembles a “ball with spikes sticking out,” as someone mentioned before) will suddenly seem non-mysterious. In turn, one way to internalize the fact that \(R^n\) has so many almost-orthogonal vectors is to internalize Shannon’s theorem that there exist good error-correcting codes.

-

To get a feel for some high-dimensional object, ask questions about the behavior of a process that takes place on that object. For example: if I drop a ball here, which local minimum will it settle into? How long does this random walk on \(\lbrace 0,1 \rbrace ^n\) take to mix?

Answer 3 (score 52)

This is a slightly different point, but Vitali Milman, who works in high-dimensional convexity, likes to draw high-dimensional convex bodies in a non-convex way. This is to convey the point that if you take the convex hull of a few points on the unit sphere of R^n, then for large n very little of the measure of the convex body is anywhere near the corners, so in a certain sense the body is a bit like a small sphere with long thin “spikes”.

7: Philosophy behind Mochizuki’s work on the ABC conjecture (score 127623 in 2013)

Question

Mochizuki has recently announced a proof of the ABC conjecture. It is far too early to judge its correctness, but it builds on many years of work by him. Can someone briefly explain the philosophy behind his work and comment on why it might be expected to shed light on questions like the ABC conjecture?

Answer accepted (score 172)

I would have preferred not to comment seriously on Mochizuki’s work before much more thought had gone into the very basics, but judging from the internet activity, there appears to be much interest in this subject, especially from young people. It would obviously be very nice if they were to engage with this circle of ideas, regardless of the eventual status of the main result of interest. That is to say, the current sense of urgency to understand something seems generally a good thing. So I thought I’d give the flimsiest bit of introduction imaginable at this stage. On the other hand, as with many of my answers, there’s the danger I’m just regurgitating common knowlege in a long-winded fashion, in which case, I apologize.

For anyone who wants to really get going, I recommend as starting point some familiarity with two papers, ‘The Hodge-Arakelov theory of elliptic curves (HAT)’ and ‘The Galois-theoretic Kodaira-Spencer morphism of an elliptic curve (GTKS).’ [It has been noted here and there that the ‘Survey of Hodge Arakelov Theory I,II’ papers might be reasonable alternatives.][I’ve just examined them again, and they really might be the better way to begin.] These papers depart rather little from familiar language, are essential prerequisites for the current series on IUTT, and will take you a long way towards a grasp at least of the motivation behind Mochizuki’s imposing collected works. This was the impression I had from conversations six years ago, and then Mochizuki himself just pointed me to page 10 of IUTT I, where exactly this is explained. The goal of the present answer is to decipher just a little bit those few paragraphs.

The beginning of the investigation is indeed the function field case (over \(\mathbb{C}\), for simplicity), where one is given a family \[f:E \rightarrow B\] of elliptic curves over a compact base, best assumed to be semi-stable and non-isotrivial. There is an exact sequence \[0\rightarrow \omega_E \rightarrow H^1_{DR}(E) \rightarrow H^1(O_E)\rightarrow0,\] which is moved by the logarithmic Gauss-Manin connection of the family. (I hope I will be forgiven for using standard and non-optimal notation without explanation in this note.) That is to say, if \(S\subset B\) is the finite set of images of the bad fibers, there is a log connection \[H^1_{DR}(E) \rightarrow H^1_{DR}(E) \otimes \Omega_B(S),\] which does not preserve \(\omega_E\). This fact is crucial, since it leads to an \(O_B\)-linear Kodaira-Spencer map \[KS:\omega \rightarrow H^1(O_E)\otimes \Omega_B(S),\] and thence to a non-trivial map \[\omega_E^2\rightarrow \Omega_B(S).\] From this, one easily deduces Szpiro’s inequality: \[\deg (\omega_E) \leq (1/2)( 2g_B-2+|S|).\] At the most simple-minded level, one could say that Mochizuki’s programme has been concerned with replicating this argument over a number field \(F\). Since it has to do with differentiation on \(B\), which eventually turns into \(O_F\), some philosophical connection to \(\mathbb{F}_1\)-theory begins to appear. I will carry on using the same notation as above, except now \(B=Spec(O_F)\).

A large part of HAT is exactly concerned with the set-up necessary to implement this idea, where, roughly speaking, the Galois action has to play the role of the GM connection. Obviously, \(G_F\) doesn’t act on \(H^1_{DR}(E)\). But it does act on \(H^1_{et}(\bar{E})\) with various coefficients. The comparison between these two structures is the subject of \(p\)-adic Hodge theory, which sadly works only over local fields rather than a global one. But Mochizuki noted long ago that something like \(p\)-adic Hodge theory should be a key ingredient because over \(\mathbb{C}\), the comparison isomorphism \[H^1_{DR}(E)\simeq H^1(E(\mathbb{C}), \mathbb{Z})\otimes_{\mathbb{Z}} O_B\] allows us to completely recover the GM connection by the condition that the topological cohomology generates the flat sections.

In order to get a global arithmetic analogue, Mochizuki has to formulate a discrete non-linear version of the comparison isomorphism. What is non-linear? This is the replacement of \(H^1_{DR}\) by the universal extension \[E^{\dagger}\rightarrow E,\] (the moduli space of line bundles with flat connection on \(E\)) whose tangent space is \(H^1_{DR}\) (considerations of this nature already come up in usual p-adic Hodge theory). What is discrete is the 'etale cohomology, which will just be \(E[\ell]\) with global Galois action, where \(\ell\) can eventually be large, on the order of the height of \(E\) (that is \(\deg (\omega_E)\)). The comparison isomorphism in this context takes the following form: \[\Xi: A_{DR}=\Gamma(E^{\dagger}, L)^{<\ell}\simeq L|E[\ell]\simeq (L|e_{E})\otimes O_{E[\ell]}.\] (I apologize for using the notation \(A_{DR}\) for the space that Mochizuki denotes by a calligraphic \(H\). I can’t seem to write calligraphic characters here.) Here, \(L\) is a suitably chosen line bundle of degree \(\ell\) on \(E\), which can then be pulled back to \(E^{\dagger}\). The inequality refers to the polynomial degree in the fiber direction of \(E^{\dagger} \rightarrow E\). The isomorphism is effected via evaluation of sections at \[E^{\dagger}[\ell]\simeq E[\ell].\] Finally, \[ L|E[\ell]\simeq (L|e_{E})\otimes O_{E[\ell]}\] comes from Mumford’s theory of theta functions. The interpretation of the statement is that it gives an isomorphism between the space of functions of some bounded fiber degree on non-linear De Rham cohomology and the space of functions on discrete 'etale cohomology. This kind of statement is entirely due to Mochizuki. One sometimes speaks of \(p\)-adic Hodge theory with finite coefficients, but that refers to a theory that is not only local, but deals with linear De Rham cohomology with finite coefficients.

Now for some corrections: As stated, the isomorphism is not true, and must be modified at the places of bad reduction, the places dividing \(\ell\), and the infinite places. This correction takes up a substantial portion of the HAT paper. That is, the isomorphism is generically true over \(B\), but to make it true everywhere, the integral structures must be modified in subtle and highly interesting ways, while one must consider also a comparison of metrics, since these will obviously figure in an arithmetic analogue of Szpiro’s conjecture. The correction at the finite bad places can be interpreted via coordinates near infinity on the moduli stack of elliptic curves as the subtle phenomenon that Mochizuki refers to as ‘Gaussian poles’ (in the coordinate \(q\)). Since this is a superficial introduction, suffice it to say for now that these Gaussian poles end up being a major obstruction in this portion of Mochizuki’s theory.

In spite of this, it is worthwhile giving at least a small flavor of Mochizuki’s Galois-theoretic KS map. The point is that \(A_{DR}\) has a Hodge filtration defined by

$F^rA_{DR}= (E^{}, L)^{ < r} $

(the direction is unconventional), and this is moved around by the Galois action induced by the comparison isomorphism. So one gets thereby a map \[G_F\rightarrow Fil (A_{DR})\] into some space of filtrations on \(A_{DR}\). This is, in essence, the Galois-theoretic KS map. That, is if we consider the equivalence over \(\mathbb{C}\) of \(\pi_1\)-actions and connections, the usual KS map measures the extent to which the GM connection moves around the Hodge filtration. Here, we are measuring the same kind of motion for the \(G_F\)-action.

This is already very nice, but now comes a very important variant, essential for understanding the motivation behind the IUTT papers. In the paper GTKS, Mochizuki modified this map, producing instead a ‘Lagrangian’ version. That is, he assumed the existence of a Lagrangian Galois-stable subspace \(G^{\mu}\subset E[l]\) giving rise to another isomorphism \[\Xi^{Lag}:A_{DR}^{H}\simeq L\otimes O_{G^{\mu}},\] where \(H\) is a Lagrangian complement to \(G^{\mu}\), which I believe does not itself need to be Galois stable. \(H\) is acting on the space of sections, again via Mumford’s theory. This can be used to get another KS morphism to filtrations on \(A_{DR}^{H}\). But the key point is that

\(\Xi^{Lag}\), in contrast to \(\Xi\), is free of the Gaussian poles

via an argument I can’t quite remember (If I ever knew).

At this point, it might be reasonable to see if \(\Xi^{Lag}\) contributes towards a version of Szpiro’s inequality (after much work and interpretation), except for one small problem. A subspace like \(G^{\mu}\) has no reason to exist in general. This is why GTKS is mostly about the universal elliptic curve over a formal completion near \(\infty\) on the moduli stack of elliptic curves, where such a space does exists. What Mochizuki explains on IUTT page 10 is exactly that the scheme-theoretic motivation for IUG was to enable the move to a single elliptic curve over \(B=Spec(O_F)\), via the intermediate case of an elliptic curve ‘in general position’.

To repeat:

A good ‘nonsingular’ theory of the KS map over number fields requires a global Galois invariant Lagrangian subspace \(G^{\mu}\subset E[l]\).

One naive thought might just be to change base to the field generated by the \(\ell\)-torsion, except one would then lose the Galois action one was hoping to use. (Remember that Szpiro’s inequality is supposed to come from moving the Hodge filtration inside De Rham cohomology.) On the other hand, such a subspace does often exist locally, for example, at a place of bad reduction. So one might ask if there is a way to globally extend such local subspaces.

It seems to me that this is one of the key things going on in the IUTT papers I-IV. As he say in loc. cit. he works with various categories of collections of local objects that simulate global objects. It is crucial in this process that many of the usual scheme-theoretic objects, local or global, are encoded as suitable categories with a rich and precise combinatorial structure. The details here get very complicated, the encoding of a scheme into an associated Galois category of finite 'etale covers being merely the trivial case. For example, when one would like to encode the Archimedean data coming from an arithmetic scheme (which again, will clearly be necessary for Szpiro’s conjecture), the attempt to come up with a category of about the same order of complexity as a Galois category gives rise to the notion of a Frobenioid. Since these play quite a central role in Mochizuki’s theory, I will quote briefly from his first Frobenioid paper:

‘Frobenioids provide a single framework [cf. the notion of a “Galois category”; the role of monoids in log geometry] that allows one to capture the essential aspects of both the Galois and the divisor theory of number fields, on the one hand, and function fields, on the other, in such a way that one may continue to work with, for instance, global degrees of arithmetic line bundles on a number field, but which also exhibits the new phenomenon [not present in the classical theory of number fields] of a “Frobenius endomorphism” of the Frobenioid associated to a number field.’

I believe the Frobenioid associated to a number field is something close to the finite 'etale covers of \(Spec(O_F)\) (equipped with some log structure) together with metrized line bundles on them, although it’s probably more complicated. The Frobenious endomorphism for a prime \(p\) is then something like the functor that just raises line bundles to the \(p\)-th power. This is a functor that would come from a map of schemes if we were working in characteristic \(p\), but obviously not in characteristic zero. But this is part of the reason to start encoding in categories:

We get more morphisms and equivalences.

Some of you will notice at this point the analogy to developments in algebraic geometry where varieties are encoded in categories, such as the derived category of coherent sheaves. There as well, one has reconstruction theorems of the Orlov type, as well as the phenomenon of non-geometric morphisms of the categories (say actions of braid groups). Non-geometric morphisms appear to be very important in Mochizuki’s theory, such as the Frobenius above, which allows us to simulate characteristic \(p\) geometry in characteristic zero. Another important illustrative example is a non-geometric isomorphism between Galois groups of local fields (which can’t exist for global fields because of the Neukirch-Uchida theorem). In fact, I think Mochizuki was rather fond of Ihara’s comment that the positive proof of the anabelian conjecture was somewhat of a disappointment, since it destroys the possibility that encoding curves into their fundamental groups will give rise to a richer category. Anyways, I believe the importance of non-geometric maps of categories encoding rather conventional objects is that

they allow us to glue together several standard categories in nonstandard ways.

Obviously, to play this game well, some things need to be encoded in rigid ways, while others should have more flexible encodings.

For a very simple example that gives just a bare glimpse of the general theory, you might consider a category of pairs \[(G,F),\] where \(G\) is a profinite topological group of a certain type and \(F\) is a filtration on \(G\). It’s possible to write down explicit conditions that ensure that \(G\) is the Galois group of a local field and \(F\) is its ramification filtration in the upper numbering (actually, now I think about it, I’m not sure about ‘explicit conditions’ for the filtration part, but anyways). Furthermore, it is a theorem of Mochizuki and Abrashkin that the functor that takes a local field to the corresponding pair is fully faithful. So now, you can consider triples \[(G,F_1, F_2),\] where \(G\) is a group and the \(F_i\) are two filtrations of the right type. If \(F_1=F_2\), then this ‘is’ just a local field. But now you can have objects with \(F_1\neq F_2\), that correspond to strange amalgams of two local fields.

As another example, one might take a usual global object, such as \[ (E, O_F, E[l], V)\] (where \(V\) denotes a collection of valuations of \(F(E[l])\) that restrict bijectively to the valuations \(V_0\) of \(F\)), and associate to it a collection of local categories indexed by \(V_0\) (something like Frobenioids corresponding to the \(E_v\) for \(v\in V_0\)). One can then try to glue them together in non-standard ways along sub-categories, after performing a number of non-standard transformations. My rough impression at the moment is that the ‘Hodge theatres’ arise in this fashion. [This is undoubtedly a gross oversimplification, which I will correct in later amendments.] You might further imagine that some construction of this sort will eventually retain the data necessary to get the height of \(E\), but also have data corresponding to the \(G^{\mu}\), necessary for the Lagrangian KS map. In any case, I hope you can appreciate that a good deal of ‘dismantling’ and ‘reconstructing,’ what Mochizuki calls surgery, will be necessary.

I can’t emphasize enough times that much of what I write is based on faulty memory and guesswork. At best, it is superficial, while at worst, it is (not even) wrong. [In particular, I am no longer sure that the GTKS map is used in an entirely direct fashion.] I have not yet done anything with the current papers than give them a cursory glance. If I figure out more in the coming weeks, I will make corrections. But in the meanwhile, I do hope what I wrote here is mostly more helpful than misleading.

Allow me to make one remark about set theory, about which I know next to nothing. Even with more straightforward papers in arithmetic geometry, the question sometimes arises about Grothendieck’s universe axiom, mostly because universes appear to be used in SGA4. Usually, number-theorists (like me) neither understand, nor care about such foundational matters, and questions about them are normally met with a shrug. The conventional wisdom of course is that any of the usual theorems and proofs involving Grothendieck cohomology theories or topoi do not actually rely on the existence of universes, except general laziness allows us to insert some reference that eventually follows a trail back to SGA4. However, this doesn’t seem to be the case with Mochizuki’s paper. That is, universes and interactions between them seem to be important actors rather than conveniences. How this is really brought about, and whether more than the universe axiom is necessary for the arguments, I really don’t understand enough yet to say. In any case, for a number-theorist or an algebraic geometer, I would guess it’s still prudent to acquire a reasonable feel for the ‘usual’ background and motivation (that is, HAT, GTKS, and anabelian things) before worrying too much about deeper issues of set theory.

Answer 2 (score 172)

I would have preferred not to comment seriously on Mochizuki’s work before much more thought had gone into the very basics, but judging from the internet activity, there appears to be much interest in this subject, especially from young people. It would obviously be very nice if they were to engage with this circle of ideas, regardless of the eventual status of the main result of interest. That is to say, the current sense of urgency to understand something seems generally a good thing. So I thought I’d give the flimsiest bit of introduction imaginable at this stage. On the other hand, as with many of my answers, there’s the danger I’m just regurgitating common knowlege in a long-winded fashion, in which case, I apologize.

For anyone who wants to really get going, I recommend as starting point some familiarity with two papers, ‘The Hodge-Arakelov theory of elliptic curves (HAT)’ and ‘The Galois-theoretic Kodaira-Spencer morphism of an elliptic curve (GTKS).’ [It has been noted here and there that the ‘Survey of Hodge Arakelov Theory I,II’ papers might be reasonable alternatives.][I’ve just examined them again, and they really might be the better way to begin.] These papers depart rather little from familiar language, are essential prerequisites for the current series on IUTT, and will take you a long way towards a grasp at least of the motivation behind Mochizuki’s imposing collected works. This was the impression I had from conversations six years ago, and then Mochizuki himself just pointed me to page 10 of IUTT I, where exactly this is explained. The goal of the present answer is to decipher just a little bit those few paragraphs.

The beginning of the investigation is indeed the function field case (over \(\mathbb{C}\), for simplicity), where one is given a family \[f:E \rightarrow B\] of elliptic curves over a compact base, best assumed to be semi-stable and non-isotrivial. There is an exact sequence \[0\rightarrow \omega_E \rightarrow H^1_{DR}(E) \rightarrow H^1(O_E)\rightarrow0,\] which is moved by the logarithmic Gauss-Manin connection of the family. (I hope I will be forgiven for using standard and non-optimal notation without explanation in this note.) That is to say, if \(S\subset B\) is the finite set of images of the bad fibers, there is a log connection \[H^1_{DR}(E) \rightarrow H^1_{DR}(E) \otimes \Omega_B(S),\] which does not preserve \(\omega_E\). This fact is crucial, since it leads to an \(O_B\)-linear Kodaira-Spencer map \[KS:\omega \rightarrow H^1(O_E)\otimes \Omega_B(S),\] and thence to a non-trivial map \[\omega_E^2\rightarrow \Omega_B(S).\] From this, one easily deduces Szpiro’s inequality: \[\deg (\omega_E) \leq (1/2)( 2g_B-2+|S|).\] At the most simple-minded level, one could say that Mochizuki’s programme has been concerned with replicating this argument over a number field \(F\). Since it has to do with differentiation on \(B\), which eventually turns into \(O_F\), some philosophical connection to \(\mathbb{F}_1\)-theory begins to appear. I will carry on using the same notation as above, except now \(B=Spec(O_F)\).

A large part of HAT is exactly concerned with the set-up necessary to implement this idea, where, roughly speaking, the Galois action has to play the role of the GM connection. Obviously, \(G_F\) doesn’t act on \(H^1_{DR}(E)\). But it does act on \(H^1_{et}(\bar{E})\) with various coefficients. The comparison between these two structures is the subject of \(p\)-adic Hodge theory, which sadly works only over local fields rather than a global one. But Mochizuki noted long ago that something like \(p\)-adic Hodge theory should be a key ingredient because over \(\mathbb{C}\), the comparison isomorphism \[H^1_{DR}(E)\simeq H^1(E(\mathbb{C}), \mathbb{Z})\otimes_{\mathbb{Z}} O_B\] allows us to completely recover the GM connection by the condition that the topological cohomology generates the flat sections.

In order to get a global arithmetic analogue, Mochizuki has to formulate a discrete non-linear version of the comparison isomorphism. What is non-linear? This is the replacement of \(H^1_{DR}\) by the universal extension \[E^{\dagger}\rightarrow E,\] (the moduli space of line bundles with flat connection on \(E\)) whose tangent space is \(H^1_{DR}\) (considerations of this nature already come up in usual p-adic Hodge theory). What is discrete is the 'etale cohomology, which will just be \(E[\ell]\) with global Galois action, where \(\ell\) can eventually be large, on the order of the height of \(E\) (that is \(\deg (\omega_E)\)). The comparison isomorphism in this context takes the following form: \[\Xi: A_{DR}=\Gamma(E^{\dagger}, L)^{<\ell}\simeq L|E[\ell]\simeq (L|e_{E})\otimes O_{E[\ell]}.\] (I apologize for using the notation \(A_{DR}\) for the space that Mochizuki denotes by a calligraphic \(H\). I can’t seem to write calligraphic characters here.) Here, \(L\) is a suitably chosen line bundle of degree \(\ell\) on \(E\), which can then be pulled back to \(E^{\dagger}\). The inequality refers to the polynomial degree in the fiber direction of \(E^{\dagger} \rightarrow E\). The isomorphism is effected via evaluation of sections at \[E^{\dagger}[\ell]\simeq E[\ell].\] Finally, \[ L|E[\ell]\simeq (L|e_{E})\otimes O_{E[\ell]}\] comes from Mumford’s theory of theta functions. The interpretation of the statement is that it gives an isomorphism between the space of functions of some bounded fiber degree on non-linear De Rham cohomology and the space of functions on discrete 'etale cohomology. This kind of statement is entirely due to Mochizuki. One sometimes speaks of \(p\)-adic Hodge theory with finite coefficients, but that refers to a theory that is not only local, but deals with linear De Rham cohomology with finite coefficients.

Now for some corrections: As stated, the isomorphism is not true, and must be modified at the places of bad reduction, the places dividing \(\ell\), and the infinite places. This correction takes up a substantial portion of the HAT paper. That is, the isomorphism is generically true over \(B\), but to make it true everywhere, the integral structures must be modified in subtle and highly interesting ways, while one must consider also a comparison of metrics, since these will obviously figure in an arithmetic analogue of Szpiro’s conjecture. The correction at the finite bad places can be interpreted via coordinates near infinity on the moduli stack of elliptic curves as the subtle phenomenon that Mochizuki refers to as ‘Gaussian poles’ (in the coordinate \(q\)). Since this is a superficial introduction, suffice it to say for now that these Gaussian poles end up being a major obstruction in this portion of Mochizuki’s theory.

In spite of this, it is worthwhile giving at least a small flavor of Mochizuki’s Galois-theoretic KS map. The point is that \(A_{DR}\) has a Hodge filtration defined by

$F^rA_{DR}= (E^{}, L)^{ < r} $

(the direction is unconventional), and this is moved around by the Galois action induced by the comparison isomorphism. So one gets thereby a map \[G_F\rightarrow Fil (A_{DR})\] into some space of filtrations on \(A_{DR}\). This is, in essence, the Galois-theoretic KS map. That, is if we consider the equivalence over \(\mathbb{C}\) of \(\pi_1\)-actions and connections, the usual KS map measures the extent to which the GM connection moves around the Hodge filtration. Here, we are measuring the same kind of motion for the \(G_F\)-action.

This is already very nice, but now comes a very important variant, essential for understanding the motivation behind the IUTT papers. In the paper GTKS, Mochizuki modified this map, producing instead a ‘Lagrangian’ version. That is, he assumed the existence of a Lagrangian Galois-stable subspace \(G^{\mu}\subset E[l]\) giving rise to another isomorphism \[\Xi^{Lag}:A_{DR}^{H}\simeq L\otimes O_{G^{\mu}},\] where \(H\) is a Lagrangian complement to \(G^{\mu}\), which I believe does not itself need to be Galois stable. \(H\) is acting on the space of sections, again via Mumford’s theory. This can be used to get another KS morphism to filtrations on \(A_{DR}^{H}\). But the key point is that

\(\Xi^{Lag}\), in contrast to \(\Xi\), is free of the Gaussian poles

via an argument I can’t quite remember (If I ever knew).

At this point, it might be reasonable to see if \(\Xi^{Lag}\) contributes towards a version of Szpiro’s inequality (after much work and interpretation), except for one small problem. A subspace like \(G^{\mu}\) has no reason to exist in general. This is why GTKS is mostly about the universal elliptic curve over a formal completion near \(\infty\) on the moduli stack of elliptic curves, where such a space does exists. What Mochizuki explains on IUTT page 10 is exactly that the scheme-theoretic motivation for IUG was to enable the move to a single elliptic curve over \(B=Spec(O_F)\), via the intermediate case of an elliptic curve ‘in general position’.

To repeat:

A good ‘nonsingular’ theory of the KS map over number fields requires a global Galois invariant Lagrangian subspace \(G^{\mu}\subset E[l]\).

One naive thought might just be to change base to the field generated by the \(\ell\)-torsion, except one would then lose the Galois action one was hoping to use. (Remember that Szpiro’s inequality is supposed to come from moving the Hodge filtration inside De Rham cohomology.) On the other hand, such a subspace does often exist locally, for example, at a place of bad reduction. So one might ask if there is a way to globally extend such local subspaces.

It seems to me that this is one of the key things going on in the IUTT papers I-IV. As he say in loc. cit. he works with various categories of collections of local objects that simulate global objects. It is crucial in this process that many of the usual scheme-theoretic objects, local or global, are encoded as suitable categories with a rich and precise combinatorial structure. The details here get very complicated, the encoding of a scheme into an associated Galois category of finite 'etale covers being merely the trivial case. For example, when one would like to encode the Archimedean data coming from an arithmetic scheme (which again, will clearly be necessary for Szpiro’s conjecture), the attempt to come up with a category of about the same order of complexity as a Galois category gives rise to the notion of a Frobenioid. Since these play quite a central role in Mochizuki’s theory, I will quote briefly from his first Frobenioid paper:

‘Frobenioids provide a single framework [cf. the notion of a “Galois category”; the role of monoids in log geometry] that allows one to capture the essential aspects of both the Galois and the divisor theory of number fields, on the one hand, and function fields, on the other, in such a way that one may continue to work with, for instance, global degrees of arithmetic line bundles on a number field, but which also exhibits the new phenomenon [not present in the classical theory of number fields] of a “Frobenius endomorphism” of the Frobenioid associated to a number field.’

I believe the Frobenioid associated to a number field is something close to the finite 'etale covers of \(Spec(O_F)\) (equipped with some log structure) together with metrized line bundles on them, although it’s probably more complicated. The Frobenious endomorphism for a prime \(p\) is then something like the functor that just raises line bundles to the \(p\)-th power. This is a functor that would come from a map of schemes if we were working in characteristic \(p\), but obviously not in characteristic zero. But this is part of the reason to start encoding in categories:

We get more morphisms and equivalences.

Some of you will notice at this point the analogy to developments in algebraic geometry where varieties are encoded in categories, such as the derived category of coherent sheaves. There as well, one has reconstruction theorems of the Orlov type, as well as the phenomenon of non-geometric morphisms of the categories (say actions of braid groups). Non-geometric morphisms appear to be very important in Mochizuki’s theory, such as the Frobenius above, which allows us to simulate characteristic \(p\) geometry in characteristic zero. Another important illustrative example is a non-geometric isomorphism between Galois groups of local fields (which can’t exist for global fields because of the Neukirch-Uchida theorem). In fact, I think Mochizuki was rather fond of Ihara’s comment that the positive proof of the anabelian conjecture was somewhat of a disappointment, since it destroys the possibility that encoding curves into their fundamental groups will give rise to a richer category. Anyways, I believe the importance of non-geometric maps of categories encoding rather conventional objects is that

they allow us to glue together several standard categories in nonstandard ways.

Obviously, to play this game well, some things need to be encoded in rigid ways, while others should have more flexible encodings.

For a very simple example that gives just a bare glimpse of the general theory, you might consider a category of pairs \[(G,F),\] where \(G\) is a profinite topological group of a certain type and \(F\) is a filtration on \(G\). It’s possible to write down explicit conditions that ensure that \(G\) is the Galois group of a local field and \(F\) is its ramification filtration in the upper numbering (actually, now I think about it, I’m not sure about ‘explicit conditions’ for the filtration part, but anyways). Furthermore, it is a theorem of Mochizuki and Abrashkin that the functor that takes a local field to the corresponding pair is fully faithful. So now, you can consider triples \[(G,F_1, F_2),\] where \(G\) is a group and the \(F_i\) are two filtrations of the right type. If \(F_1=F_2\), then this ‘is’ just a local field. But now you can have objects with \(F_1\neq F_2\), that correspond to strange amalgams of two local fields.

As another example, one might take a usual global object, such as \[ (E, O_F, E[l], V)\] (where \(V\) denotes a collection of valuations of \(F(E[l])\) that restrict bijectively to the valuations \(V_0\) of \(F\)), and associate to it a collection of local categories indexed by \(V_0\) (something like Frobenioids corresponding to the \(E_v\) for \(v\in V_0\)). One can then try to glue them together in non-standard ways along sub-categories, after performing a number of non-standard transformations. My rough impression at the moment is that the ‘Hodge theatres’ arise in this fashion. [This is undoubtedly a gross oversimplification, which I will correct in later amendments.] You might further imagine that some construction of this sort will eventually retain the data necessary to get the height of \(E\), but also have data corresponding to the \(G^{\mu}\), necessary for the Lagrangian KS map. In any case, I hope you can appreciate that a good deal of ‘dismantling’ and ‘reconstructing,’ what Mochizuki calls surgery, will be necessary.

I can’t emphasize enough times that much of what I write is based on faulty memory and guesswork. At best, it is superficial, while at worst, it is (not even) wrong. [In particular, I am no longer sure that the GTKS map is used in an entirely direct fashion.] I have not yet done anything with the current papers than give them a cursory glance. If I figure out more in the coming weeks, I will make corrections. But in the meanwhile, I do hope what I wrote here is mostly more helpful than misleading.

Allow me to make one remark about set theory, about which I know next to nothing. Even with more straightforward papers in arithmetic geometry, the question sometimes arises about Grothendieck’s universe axiom, mostly because universes appear to be used in SGA4. Usually, number-theorists (like me) neither understand, nor care about such foundational matters, and questions about them are normally met with a shrug. The conventional wisdom of course is that any of the usual theorems and proofs involving Grothendieck cohomology theories or topoi do not actually rely on the existence of universes, except general laziness allows us to insert some reference that eventually follows a trail back to SGA4. However, this doesn’t seem to be the case with Mochizuki’s paper. That is, universes and interactions between them seem to be important actors rather than conveniences. How this is really brought about, and whether more than the universe axiom is necessary for the arguments, I really don’t understand enough yet to say. In any case, for a number-theorist or an algebraic geometer, I would guess it’s still prudent to acquire a reasonable feel for the ‘usual’ background and motivation (that is, HAT, GTKS, and anabelian things) before worrying too much about deeper issues of set theory.

Answer 3 (score 85)

Last revision: 10/20. (Probably the last for at least some time to come: until Mochizuki uploads his revisions of IUTT-III and IUTT-IV. My apology for the multiple revisions. )

Completely rewritten. (9/26)

It seems indeed that nothing like Theorem 1.10 from Mochizuki’s IUTT-IV could hold.

Here is an infinite set of counterexamples, assuming for convenience two standard conjectures (the first being in fact a consequence of ABC), that contradict Thm. 1.10 very badly.

Assumptions:

-

A (Consequence of ABC) For all but finitely many elliptic curves over \(\mathbb{Q}\), the conductor \(N\) and the minimal discriminant \(\Delta\) satisfy \(\log{|\Delta|} < (\log{N})^2\).

-

B (Uniform Serre Open Image conjecture) For each \(d \in \mathbb{N}\), there is a constant \(c(d) < \infty\) such that for every number field \(F/\mathbb{Q}\) with \([F:\mathbb{Q}] \leq d\), and every non-CM elliptic curve \(E\) over \(F\), and every prime \(\ell \geq c(d)\), the Galois representation of \(G_F\) on \(E[\ell]\) has full image \(\mathrm{GL}_2(\mathbb{Z}/{\ell})\). (In fact, it is sufficient to take the weaker version in which \(F\) is held fixed. )

Further, as far as I can tell from the proof of Theorem 1.10 of IUTTIV, the only reason for taking \(F := F_{\mathrm{tpd}}\big( \sqrt{-1}, E_{F_{\mathrm{tpd}}}[3\cdot 5] \big)\) — rather than simply \(F := F_{\mathrm{tpd}}(\sqrt{-1})\) — was to ensure that \(E\) has semistable reduction over \(F\). Since I will only work in what follows with semistable elliptic curves over \(\mathbb{Q}\), I will assume, for a mild technical convenience in the examples below, that for elliptic curves already semistable over \(F_{\mathrm{tpd}}\), we may actually take \(F := F_{\mathrm{tpd}}(\sqrt{-1})\) in Theorem 1.10.

The infinite set of counterexamples. They come from Masser’s paper [Masser: Note on a conjecture of Szpiro, Asterisque 1990], as follows. Masser has produced an infinite set of Frey-Hellougarch (i.e., semistable and with rational 2-torsion) elliptic curves over \(\mathbb{Q}\) whose conductor \(N\) and minimal discriminant \(\Delta\) satisfy \[ (1) \hspace{3cm} \frac{1}{6}\log{|\Delta|} \geq \log{N} + \frac{\sqrt{\log{N}}}{\log{\log{N}}}. \] (Thus, \(N\) in these examples may be taken arbitrarily large. ) By (A) above, taking \(N\) big enough will ensure that \[ (2) \hspace{3cm} \log{|\Delta|} < (\log{N})^2. \] Next, the sum of the logarithms of the primes in the interval \(\big( (\log{N})^2, 3(\log{N})^2 \big)\) is \(2(\log{N})^2 + o((\log{N})^2)\), so it is certainly \(> (\log{N})^2\) for \(N \gg 0\) big enough. Thus, by (2), it is easy to see that the interval \(\big( (\log{N})^2, 3(\log{N})^2 \big)\) contains a prime \(\ell\) which divides neither \(|\Delta|\) nor any of the exponents \(\alpha = \mathrm{ord}_p(\Delta)\) in the prime factorization \(|\Delta| = \prod p^{\alpha}\) of \(|\Delta|\).

Consider now the pair \((E,\ell)\): it has \(F_{\mathrm{mod}} = \mathbb{Q}\), and since \(E\) has rational \(2\)-torsion, \(F_{\mathrm{tpd}} = \mathbb{Q}\) as well. Let \(F := \mathbb{Q} \big( \sqrt{-1}\big)\). I claim that, upon taking \(N\) big enough, the pair \((E_F,\ell)\) arises from an initial \(\Theta\)-datum as in IUTT-I, Definition 3.1. Indeed:

- Certainly (a), (e), (f) of IUTT-I, Def. 3.1 are satisfied (with appropriate \(\\underline{\mathbb{V}}, \, \\underline{\epsilon}\));

-

- of IUTT-I, Def. 3.1 is satisfied since by construction \(E\) is semistable over \(\mathbb{Q}\);

-

- of IUTT-I, Def. 3.1 is satisfied, in view of (B) above and the choice of \(\ell\), as soon as \(N \gg 0\) is big enough (recall that \(\ell > (\log{N})^2\) by construction!), and by the observation that, for \(v\) a place of \(F = \mathbb{Q}(\sqrt{-1})\), the order of the \(v\)-adic \(q\)-parameter of \(E\) equals \(\mathrm{ord}_v (\Delta)\), which equals \(\mathrm{ord}_p(\Delta)\) for \(v \mid p > 2\), and \(2\cdot\mathrm{ord}_2(\Delta)\) for \(v \mid 2\);

while \(\mathbb{V}_{\mathrm{mod}}^{\mathrm{bad}}\) consists of the primes dividing \(\Delta\);

- Finally, (d) of IUTT-I, Def. 3.1 is satisfied upon excluding at most four of Masser’s examples \(E\). (See page 37 of IUTT-IV).

Now, take \(\epsilon := \big( \log{N} \big)^{-2}\) in Theorem 1.10 of IUTT-IV; this is certainly permissible for \(N \gg 0\) large enough. I claim that the conclusion of Theorem 1.10 contradicts (1) as soon as \(N \gg 0\) is large enough.

For note that Mochizuki’s quantity \(\log(\mathfrak{q})\) is precisely \(\log{|\Delta|}\) (reference: see e.g. Szpiro’s article in the Grothendieck Festschrift, vol. 3); his \(\log{(\mathfrak{d}^{\mathrm{tpd}})}\) is zero; his \(d_{\mathrm{mod}}\) is \(1\); and his \(\log{(\mathfrak{f}^{\mathrm{tpd}})}\) is our \(\log{N}\). By construction, our choice \(\epsilon := \big( \log{N} \big)^{-2}\) then makes \(1/\ell < \epsilon\) and \(\ell < 3/\epsilon\), whence the finaly display of Theorem 1.10 would yield \[ \frac{1}{6} \log{|\Delta|} \leq (1+29\epsilon) \cdot \log{N} + 2\log{(3\epsilon^{-8})} < \log{N} + 16\log{\log{N}} + 32, \] where we have used \(\epsilon \log{N} = (\log{N})^{-1} < 1\) for \(N > 3\), and \(2\log{3} < 3\).

The last display contradicts (1) as soon as \(N \gg 0\) is big enough.

Thus Masser’s examples yield infinitely many counterexamples to Theorem 1.10 of IUTT-IV (as presently written).

Added on 10/15, and revised 10/20. Mochizuki has commented on the apparent contradiction between Masser’s examples and Theorem 1.10:

He writes that he will revise portions of IUTT-III and IUTT-IV, and will make them available in the near future. (He estimates January 2013 to be a reasonable period). He confirms the following [“essentially”] anticipated revision of Theorem 1.10:

Let \(E/\mathbb{Q}\) be a semistable elliptic curve with [say, for the sake of simplifying] rational \(2\)-torsion [i.e., a Frey-Hellegouarch curve] of minimal discriminant \(\Delta\) and conductor \(N\) (square-free). For \(\epsilon > 0\), let \(N_{\epsilon} := \prod_{p \mid N, p < \epsilon^{-1}} p\). Then: \[ \frac{1}{6} \log{|\Delta|} < \big( 1 + \epsilon \big) \log{N} + \Big( \omega(N_{\epsilon}) \cdot \log{(1/\epsilon)} - \log{N_{\epsilon}} \Big) + O\big( \log{(1/\epsilon)} \big) \] \[ < \log{N} + \Big( \epsilon \log{N} + \big( \epsilon \log{(1/\epsilon)} \big)^{-1} \Big) + o\Big( \big( \epsilon \log{(1/\epsilon)} \big)^{-1} \Big), \] where \(\omega(\cdot)\) denotes “number of prime factors.” The second estimate comes from the prime number theorem in the form \(\pi(t) = t/\log{t} + t/(\log{t})^2 + o\big( t/(\log{t})^2 \big)\), applied to \(t := \epsilon^{-1}\), and is sharp if you restrict \(\epsilon\) to the range \(\epsilon^{-1} < (\log{N})^{\xi}\) with \(\xi < 1\), as there nothing prevents \(N\) from being divisible by all primes \(p < (\log{N})^{\xi}\). In particular, as the Erdos-Stewart-Tijdeman-Masser construction is based on the pigeonhole principle, which cannot preclude that \(N\) be divisible by all the primes \(< (\log{N})^{2/3}\), the second estimate could very well be sharp in all the Masser examples. As it is easily seen that the bracketed term exceeds the range \(\sqrt{\log{N}}/(\log{\log{N}})\) of Masser’s examples, this has the implication that

the Erdos-Stewart-Tijdeman-Masser method cannot disprove Mochizuki’s revised inequality,

which therefore seems reasonable.

On the other hand, if we take \(\epsilon := (\log{N})^{-1}\) and assume \(\omega(N_{\epsilon})\) bounded, this would yield \((1/6)\log{|\Delta|} < \log{N} + O(\log{\log{N}})\), just as before. (Thus, Mochizuki predicts that this last bound must hold for \(N\) a large enough square-free integer such that the number of primes \(< \log{N}\) dividing \(N\) is bounded. I cannot see evidence neither for nor against this at the moment: again, the Masser and Erdos-Stewart-Tijdeman constructions are based on the pigeonhole principle, and do not seem to be able to exclude the small primes \(< \log{N}\). So here we have an open problem by which one could probe Mochizuki’s revised inequality. A reminder: in terms of the \(abc\)-triple, \(\Delta\) is essentially \((abc)^2\), and \(N = \mathrm{rad}(abc)\)).

A side remark: note that the inverse \(1/\ell\) of the prime level from the de Rham-Etale correspondence \((E^{\dagger}, < \ell) \leftrightarrow E[\ell]\) in Mochizuki’s “Hodge-Arakelov theory” ultimately figures as the \(\epsilon\) in the ABC conjecture.

[I have deleted the remainder of the 10/15 Addendum, since it is now obsolete after Mochizuki’s revised comments. ]

8: Video lectures of mathematics courses available online for free (score 120012 in 2017)

Question

It can be difficult to learn mathematics on your own from textbooks, and I often wish universities videotaped their mathematics courses and distributed them for free online. Fortunately, some universities do that (albeit to a very limited extent), and I hope we can compile here a list of all the mathematics courses one can view in their entirety online.

Please only post videos of entire courses; that is, a speaker giving one lecture introducing a subject to the audience should be off-limits, but a sequence of, say, 30 hour-long videos, each of which is a lecture delivered in a class would be very much on-topic.

Answer accepted (score 60)

Ted Chinburg has videos of his lectures for what is going on a 2 year course in algebraic number theory online( direct links to videos: semester 1, semester 2, semester 3, semester 4), and from there you can also get lectures from various seminars at Penn.

Also, there’s the MSRI database for all the things that go on there, they’re all over the website at each program’s site.

Answer 2 (score 50)

Answer 3 (score 45)

The lecture videos of Introduction to Abstract Algebra, taught by Benedict Gross at Harvard, can be downloaded here.

9: Best Algebraic Geometry text book? (other than Hartshorne) (score 118267 in 2011)

Question

I think (almost) everyone agrees that Hartshorne’s Algebraic Geometry is still the best.

Then what might be the 2nd best? It can be a book, preprint, online lecture note, webpage, etc.

One suggestion per answer please. Also, please include an explanation of why you like the book, or what makes it unique or useful.

Answer accepted (score 180)

I think Algebraic Geometry is too broad a subject to choose only one book. Maybe if one is a beginner then a clear introductory book is enough or if algebraic geometry is not ones major field of study then a self-contained reference dealing with the important topics thoroughly is enough. But Algebraic Geometry nowadays has grown into such a deep and ample field of study that a graduate student has to focus heavily on one or two topics whereas at the same time must be able to use the fundamental results of other close subfields. Therefore I find the attempt to reduce his/her study to just one book (besides Hartshorne’s) too hard and unpractical. That is why I have collected what in my humble opinion are the best books for each stage and topic of study, my personal choices for the best books are then:

-

CLASSICAL: Beltrametti-Carletti-Gallarati-Monti. “Lectures on Curves, Surfaces and Projective Varieties” which starts from the very beginning with a classical geometric style. Very complete (proves Riemann-Roch for curves in an easy language) and concrete in classic constructions needed to understand the reasons about why things are done the way they are in advanced purely algebraic books. There are very few books like this and they should be a must to start learning the subject. (Check out Dolgachev’s review.)

-

HALF-WAY/UNDERGRADUATE: Shafarevich - “Basic Algebraic Geometry” vol. 1 and 2. They may be the most complete on foundations for varieties up to introducing schemes and complex geometry, so they are very useful before more abstract studies. But the problems are hard for many beginners. They do not prove Riemann-Roch (which is done classically without cohomology in the previous recommendation) so a modern more orthodox course would be Perrin’s “Algebraic Geometry, An Introduction”, which in fact introduce cohomology and prove RR.

-

ADVANCED UNDERGRADUATE: Holme - “A Royal Road to Algebraic Geometry”. This new title is wonderful: it starts by introducing algebraic affine and projective curves and varieties and builds the theory up in the first half of the book as the perfect introduction to Hartshorne’s chapter I. The second half then jumps into a categorical introduction to schemes, bits of cohomology and even glimpses of intersection theory.

-

ONLINE NOTES: Gathmann - “Algebraic Geometry” which can be found here. Just amazing notes; short but very complete, dealing even with schemes and cohomology and proving Riemann-Roch and even hinting Hirzebruch-R-R. It is the best free course in my opinion, to get enough algebraic geometry background to understand the other more advanced and abstract titles. For an abstract algebraic approach, a freely available online course is available by the nicely done new long notes by R. Vakil.

-

GRADUATE FOR ALGEBRISTS AND NUMBER THEORISTS: Liu Qing - “Algebraic Geometry and Arithmetic Curves”. It is a very complete book even introducing some needed commutative algebra and preparing the reader to learn arithmetic geometry like Mordell’s conjecture, Faltings’ or even Fermat-Wiles Theorem.

-

GRADUATE FOR GEOMETERS: Griffiths; Harris - “Principles of Algebraic Geometry”. By far the best for a complex-geometry-oriented mind. Also useful coming from studies on several complex variables or differential geometry. It develops a lot of algebraic geometry without so much advanced commutative and homological algebra as the modern books tend to emphasize.

-

BEST ON SCHEMES: Görtz; Wedhorn - Algebraic Geometry I, Schemes with Examples and Exercises. Tons of stuff on schemes; more complete than Mumford’s Red Book (For an online free alternative check Mumfords’ Algebraic Geometry II unpublished notes on schemes.). It does a great job complementing Hartshorne’s treatment of schemes, above all because of the more solvable exercises.

-

UNDERGRADUATE ON ALGEBRAIC CURVES: Fulton - “Algebraic Curves, an Introduction to Algebraic Geometry” which can be found here. It is a classic and although the flavor is clearly of typed concise notes, it is by far the shortest but thorough book on curves, which serves as a very nice introduction to the whole subject. It does everything that is needed to prove Riemann-Roch for curves and introduces many concepts useful to motivate more advanced courses.

-

GRADUATE ON ALGEBRAIC CURVES: Arbarello; Cornalba; Griffiths; Harris - “Geometry of Algebraic Curves” vol 1 and 2. This one is focused on the reader, therefore many results are stated to be worked out. So some people find it the best way to really master the subject. Besides, the vol. 2 has finally appeared making the two huge volumes a complete reference on the subject.

-

INTRODUCTORY ON ALGEBRAIC SURFACES: Beauville - “Complex Algebraic Surfaces”. I have not found a quicker and simpler way to learn and clasify algebraic surfaces. The background needed is minimum compared to other titles.

-

ADVANCED ON ALGEBRAIC SURFACES: Badescu - “Algebraic Surfaces”. Excellent complete and advanced reference for surfaces. Very well done and indispensable for those needing a companion, but above all an expansion, to Hartshorne’s chapter.

-

ON HODGE THEORY AND TOPOLOGY: Voisin - Hodge Theory and Complex Algebraic Geometry vols. I and II. The first volume can serve almost as an introduction to complex geometry and the second to its topology. They are becoming more and more the standard reference on these topics, fitting nicely between abstract algebraic geometry and complex differential geometry.

-

INTRODUCTORY ON MODULI AND INVARIANTS: Mukai - An Introduction to Invariants and Moduli. Excellent but extremely expensive hardcover book. When a cheaper paperback edition is released by Cambridge Press any serious student of algebraic geometry should own a copy since, again, it is one of those titles that help motivate and give conceptual insights needed to make any sense of abstract monographs like the next ones.

-

ON MODULI SPACES AND DEFORMATIONS: Hartshorne - “Deformation Theory”. Just the perfect complement to Hartshorne’s main book, since it did not deal with these matters, and other books approach the subject from a different point of view (e.g. geared to complex geometry or to physicists) than what a student of AG from Hartshorne’s book may like to learn the subject.

-

ON GEOMETRIC INVARIANT THEORY: Mumford; Fogarty; Kirwan - “Geometric Invariant Theory”. Simply put, it is still the best and most complete. Besides, Mumford himself developed the subject. Alternatives are more introductory lectures by Dolgachev.

-

ON INTERSECTION THEORY: Fulton - “Intersection Theory”. It is the standard reference and is also cheap compared to others. It deals with all the material needed on intersections for a serious student going beyond Hartshorne’s appendix; it is a good reference for the use of the language of characteristic classes in algebraic geometry, proving Hirzebruch-Riemann-Roch and Grothendieck-Riemann-Roch among many interesting results.

-